by Adrian Nathan West

Achille Mbembe, Critique of Black Reason. Duke University Press, 2017.

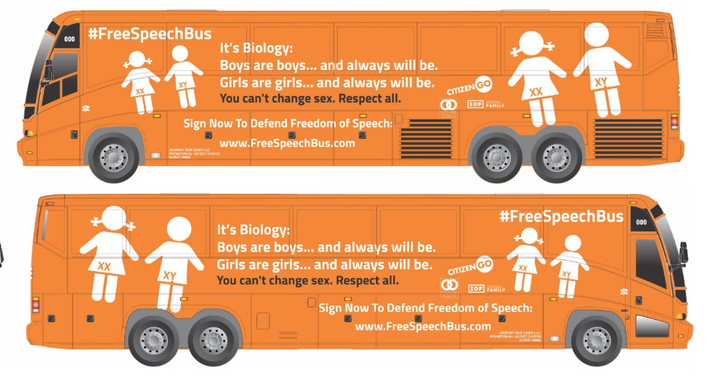

The question of Blackness, of what it might or ought to mean, lies at the fault line between timeworn notions of race as a biological destiny, rechristened for the resurgent right as “human biodiversity” or “racial realism,” and identity theory in its various permutations. If the first is generally acknowledged to lack scientific foundation, the second’s refusal to dispense entirely with race, coupled with its difficulty in establishing strict criteria as to who may and may not claim a given ethnicity, has provided fodder for advocates of dubious identitarian positions from transracialism to White Lives Matter. Yet the alternative of avowed post-racialism has frequently served as a cover for the diminishment of the historical suffering of marginalized groups or an excuse for the advocacy of policies that work to these groups’ detriment. Amid the snares of these various approaches, is a different thinking of Blackness possible?

This is the question posed by Cameroonian philosopher Achille Mbembe, whose impressive body of work has yet to achieve the prominence it deserves in the English-speaking world. Critique of Black Reason, his most accomplished book to date, opens by positing Blackness as a historical conception of a kind of being, neither entirely subject nor object, elaborated over the course of three successive phases: first, the “organized despoliation of the Atlantic slave trade,” which transformed Black flesh into “real estate,” in the words of a 1705 declaration of the Virginia Assembly, and led to the codification of racial difference following Bacon’s Rebellion in the seventeenth century; second, the birth of Black writing as an examination of the condition of Black people as “beings-taken-by-others,” which Mbembe traces from the late eighteenth century to its culmination in the dismantlement of segregation and apartheid; and third, the confluence of market globalization, economic liberalization, and technological and military innovation in the early twenty-first century (Mbembe 2017, 2-4). In this last, grim episode, the concentration of capital following the saturation of global markets, accompanied by processes of efficiency maximization, has led to the seclusion of the Black subject on the irrelevant fringes of society and to a consequent “production of indifference,” or “altruicide,” that helps render this barbarity psychologically cost-efficient for the privileged (Mbembe 2017, 3). “If yesterday’s drama of the subject was exploitation by capital,” he states, “the tragedy of the multitude today is that they are unable to be exploited at all. They are abandoned subjects, relegated to the role of a ‘superfluous humanity.’ Capital hardly needs them any more to function” (Mbembe 2017, 3; 11). This novel arrangement, according to Mbembe, has given rise to “new imperial practices” that tend toward a universalization of the Black condition, which he refers to as the “Becoming Black of the world” (Mbembe 2017, 6).

Mbembe defines race as a system of images tailored to the demands of rapacity that forestalls any encounter with an authentic subject. The briefest glance at the early literature of African exploration, from Jobson to Olfert Dapper, shows the extent to which fantasy superseded reality and public desire for the salacious and hair-raising left little room for ethnographic rigor. These accounts dished up the archetypes of those tropes of sloth, intellectual inferiority, wiliness, and concupiscence that remain current even today and that would provide eventual justification for colonialism and enslavement. “To produce Blackness is to produce a social link of subjection and a body of extraction,” Mbembe affirms, and, being constituted far in advance of any earnest investigation of African history, sociology, or folkways, these primitive notions of Black life responded less to enlightened curiosity than to the question of “how to deploy large numbers of laborers within a commercial enterprise that spanned great distances,” serving as a “racial subsidy” to the expanding plantation system (Mbembe 2017, 20).

The question of Black reason proposed by the book pertains, first of all, to the body of knowledge concerning things and people “of African origin” that came to stand in for primary experience thereof and served as “the reservoir that provided the justifications for the arithmetic of racial domination” (Mbembe 2017, 27). Black reason categorized its more or less willfully misunderstood African subjects through a series of exemptions to normalcy that mutated in conformity with the scientific reasoning of successive eras, leaving them morally and juridically illegitimate, unfit for human endeavor, suited only to forced labor. The traces of Black reason, according to Mbembe, persist in the search for Black self-consciousness, the founding gesture of which is the question: “Am I, in truth, what people say I am?” (Mbembe 2017, 28). Against this grappling with the oppressive image of Blackness, Mbembe will propose, in the book’s later chapters, an engagement with tradition for the sake of a “truth of the self no longer outside the self,” with Aimé Césaire, Amos Tutuola, Frantz Fanon, and others as his guides (Mbembe 2017, 29).

A brief caveat concerning language is in order. Translators from French frequently grapple with the word nègre, and the results are rarely ideal. It remains common in standard French in the sense of “ghostwriter” and in the now off-color expression travailler comme un nègre (equivalent to the once-current work like a nigger in English), but when used disparagingly, there is no doubt as to its intent. Mbembe’s title, and his use of nègre throughout the book, are not meant to be provocative, but they do draw on a long tradition of recalcitrant self-assertion embodied in Aimé Césaire’s famous phrase, “Nègre je suis, nègre je resterai,” which has its complement in the reappropriation of the word nigger by H. Rap Brown, Dick Gregory, and others (Césaire, 28). All this is elided in Laurent Dubois’s excellent translation; in his defense, there is no happy alternative.

For Mbembe, the racialization of consciousness takes root with the legal effort in seventeenth- and eighteenth-century America to distinguish the greater rights and privileges owed to European indentured servants from those of their enslaved African counterparts. With the growth of scientific racism and its subsequent application to African peoples in the heyday of colonialism, which coincided with the “capitulation to racism” in the southern states as described by C. Vann Woodward, Blackness was reconstituted as an Außenwelt or “World-outside” in the Schmittian sense: a zone in which enmity was paramount, rapine permissible, and the supposition of reciprocity suspended (Woodward, 67-110). Though the rationalization of scientific racism granted the field an appearance of objectivity, as an ideology racism was never independent of “the logic of profit, the politics of power, and the instinct for corruption” (Mbembe 2017, 62). This is evident from the massive wave of resettlements starting in the 1960s in South Africa, the organized attacks on Black businesses and appropriation of Black-owned lands in the United States after the Civil War, and the introduction of foreign land ownership in Haiti under Roosevelt. Whether predicated on Blacks’ irreparable inferiority, in the American case, or on the civilizing mission, in the French, the principle of race, as a “distinct moral classification,” educated the populace in “behaviors aimed at the growth of economic profitability” (Mbembe 2017, 73; 81).

In the book’s second half, Mbembe turns from racism as such to an eloquent account of the development of Black self-consciousness, which necessarily takes over and diverts the discourse of race:

The latent tension that has always broadly shaped reflection on Black identity disappears in the gap of race. The tension opposes a universalizing approach, one that proclaims a co-belonging to the human condition, with a particular approach that insists on difference and the dissimilar by emphasizing not originality as such but the principle of repetition (custom) and the values of autonomy […] We rebel not against the idea that Blacks constitute a distinct race but against the prejudice of inferiority attached to the race. The specificity of so-called African culture is not placed in doubt: what is proclaimed is the relativity of cultures in general. In this context, ‘work for the universal’ consists in expanding the Western ratio of the contributions brought by Black ‘values of civilization,’ the ‘specific genius’ of the Black race, for which ‘emotion’ in particular is considered the cornerstone (Mbembe 2017, 90).

This notion of a peculiar set of Black values has informed the praxis of evocation essential to Pan-Africanist thinkers from Edward Blyden to Marcus Garvey, whose thought has special importance for Mbembe despite Mbembe’s own universalist principles. While praising the aptitude of what he terms “heretical genius” to promote a self-sustaining understanding of the African condition, Mbembe admits that this understanding itself is a falsification to be transcended (Mbembe 2017, 102).

For Mbembe, Blackness resides above all in the individual’s inscription in the Black text, his term for the accrual of the collective recollections and reveries of people of African descent, at the core of which lies the memory of the colony. Memory he defines as “interlaced psychic images” that “appear in the symbolic and political fields,” and in the Black text, the primordial memory is the divestment of the subjectivity of the Black self (Mbembe 2017, 103). His discussion of Black consciousness in the colonial setting leans heavily on Frantz Fanon, particularly The Wretched of the Earth and Black Skin, White Masks. Here again, Mbembe’s dialectical proclivities show forth: while looking toward a future in which a new humanism might divest historical memory of the trappings of race, he pays homage to thinkers who insisted on the necessity of a specifically Black memory to combat colonial distortions; at the same time, without advocating violence, he quotes Fanon approvingly to the effect that violence, for the colonized, may prove the lone recourse able to establish reciprocity between oppressor and oppressed.

Since his 2006 essay “Necropolitics,” death has been the axis on which Mbembe’s political philosophy turns, and its invocation in Critique of Black Reason makes for some of the book’s most resonant as well as exasperating passages. Mbembe is no doubt correct in positing the line between the sovereign’s capacity to kill and the subject’s capacity to end his own life as the outermost border and defining conflict of the experience of subjection, and its relevance to the political discourse of the present day is beyond debate, especially as it bears upon those regions of the world where weakened civil institutions have allowed for a merging of private and public power and their subordination to moneyed interests. Yet his frequent linkage of these considerations to theoretical concepts of French coinage is at times belabored. In particular, the concept of the remainder (le reste), the heritage of which stretches from Saussure to Derrida and Badiou, and which Mbembe relates to the Black subject’s life-in-death through Bataille’s notion of the accursed share, muddies rather than illuminates Mbembe’s ordinarily lucid prose.

Mbembe concludes Critique of Black Reason with an examination of the prospects for overcoming the idea of Blackness in the service of a world freed of the burden of race, yet unbothered by difference and singularity. Considering négritude as a moment of “situated thinking,” born of and proper to the lived experience of the racialized subject, he calls for the contextualization of Blackness within a theory of “the rise of humanity” (Mbembe 2017, 161; 156). Blackness, in the positive sense Mbembe’s favored thinkers impart to it, would then become “a metaphysical and aesthetic envelope” directed against a specific and historically bound set of degradations and toward a humanism of the future; and the question that emerges for all liberatory struggles is “how to belong fully in this world that is common to all of us” (Mbembe 2017, 176). It is curious to read his sanguine meditations on the dialectical transcendence of Blackness against the collapse of the ideal of a post-racial America, particularly toward the end of Obama’s second term, and the re-legitimation of overt racism in the Trump era. Whereas Mbembe largely dispenses with the fictions of race to conceive of Blackness in political terms, present-day racism on the right has held onto the Black person as a biological subject while discrediting the political implications of ethnicity. Mbembe is not blind to the consequences of this decoupling of ethnic origin and political status, which has given impetus both to the annulment of protections afforded to African-Americans and to a revival of stereotypes of thugs and welfare cheats whose alleged malfeasance is undetermined by history or circumstance: he decries post-racialism as a fiction, advocating instead for a “post-Césairian era [in which] we embrace and retain the signifier ‘Black’ not with the goal of finding solace within it but rather as a way of clouding the term in order to gain distance from it” (Mbembe 2017, 173).

Increasingly, the diffusion of Blackness into the common human heritage may result as much from oppression and destitution as from any humane disposition toward fraternity. In his 1999 essay “On Private Indirect Government,” Mbembe spoke ominously of the “direct relation that exists between the mercantile imperative, the upsurge in violence, and the installation of private military, paramilitary, or jurisdictional authorities” (Mbembe 1999). Mbembe was writing specifically of Africa, and well in advance of the economic crisis in the West that permitted an unheralded transfer of wealth into the hands of a minuscule proportion of the global elite. Since then, Americans have witnessed a newly militarized police force engaged in profiteering via civil asset forfeiture, the yields of which now exceed total losses from burglary; at the same time, privatization of education, public services, and infrastructure has made a growth industry of what once were thought of as human rights. The escalation of capital that Mbembe describes in the book’s preface has meant that the citizens of countries that once dictated the brutal debt adjustment regimes imposed on the Third World now find themselves burdened by austerity as the global periphery expands and the core grows ever more restricted.

In the epilogue, “There is Only One World,” Mbembe characterizes the progressive devaluation of the forces of production, along with the reduction of subjectivity to neurologically fixed and algorithmically exploitable market components, recently criticized by philosophers such as Byung-Chul Han, as a not-yet complete “retreat from humanity” to which he opposes “the reservoirs of life” (Mbembe 2017, 179; 181). For Mbembe, this situation confronts the individual with the most basic existential questions: what the world is, what the extant and ideal relations between the parts that compose it are, what its telos is, how it should end, and how one should live within it. To conceive of a response, it is necessary to embrace the “vocation of life” as the basis for all thinking about politics and culture (Mbembe 2017, 182). Mbembe closes with a layered reference to “the Open,” which draws on Agamben, Heidegger, and finally Rilke to specify a state of plenitude, an absence of resentment, in which care is made possible beyond the distortions of abstraction through the acknowledgement and inclusion of the no-longer other. The “proclamation of difference,” he concludes, “is only one facet of a larger project––the project of a world that is coming” (Mbembe 2017, 183).

Adrian Nathan West is author of The Aesthetics of Degradation and translator of numerous works of contemporary European literature.

Bibliography

Césaire, Aimé. Nègre Je Suis, Nègre Je Resterai. Entretiens avec François Vergès. Paris: Albin Michel, 2013.

Mbembe, Achille. Critique of Black Reason, trans. Laurent Dubois. Durham, NC: Duke University Press, 2017.

Mbembe, Achille. “Du gouvernement privé indirect.” Politique africaine 73, no. 1 (1999): 103-21. doi:10.3917/polaf.073.0103.

Woodward, C. Vann. The Strange Career of Jim Crow. New York: Oxford University Press, 1955.

Works Consulted

Agamben, Giorgio. L’aperto. L’uomo e l’animale. Turin: Bollati Boringhieri, 2002.

Brown, H. Rap. Die Nigger Die. New York: Dial Press, 1969.

Dapper, Olfert. Umbständliche und eigentliche Beschreibung von Afrika, Anno 1668. https://archive.org/details/UmbstandlicheUndEigentlicheBeschreibungVonAfrikaAnno1668

Fanon, Frantz. The Wretched of the Earth, trans. Richard Philcox. New York: Grove Press, 2004.

Fanon, Frantz. Black Skin, White Masks, trans. Richard Philcox. New York: Grove Press, 2007.

Feldman, Ari. “Human Biodiversity: The Pseudoscientific Racism of the Alt-Right.” Forward, 5 August 2016. http://forward.com/opinion/national/346533/human-biodiversity-the-pseudoscientific-racism-of-the-alt-right/

Hallett, Robin. “Desolation on the Veld: Forced Removals in South Africa.” African Affairs 83, no. 332 (1984): 301-20. http://www.jstor.org/stable/722350.

Han, Byung-Chul. Im Schwarm. Berlin: Matthes & Seitz, 2013.

Heidegger, Martin. “Wozu Dichter?” in Gesamtausgabe Band 5: Holzwege. Frankfurt: Vittorio Klostermann, 1977.

Ingraham, Christopher. “Law Enforcement Took More Stuff from People than Burglars Last Year.” Washington Post, 23 February 2015.

Jobson, Richard. The Golden Trade. 1623. http://penelope.uchicago.edu/jobson/

Renda, Mary A. Taking Haiti: Military Occupation and the Culture of U.S. Imperialism, 1915-1940. Chapel Hill: University of North Carolina Press, 2001.

Rilke, Rainer Maria. Duineser Elegien. Leipzig: Insel, 1923.

Rucker, Walter, and James Nathaniel Upton, eds. Encyclopedia of American Race Riots. Westport, Connecticut and London: Greenwood Press, 2007.

Schmitt, Carl. Politische Romantik. Munich and Leipzig: Duncker and Humblot, 1919.